Is your server overloaded? Is your busy website struggling to respond? Load balancing can be a one-stop solution to such bottlenecks. As the term suggests, it is all about distributing workloads across multiple resources so that no single device is overwhelmed.

In the case of servers, load balancing is the method of distributing workloads across multiple resources. These resources can be web servers, DNS clusters, networks, CPUs or disk drives. The main aim of load balancing is to optimize resource usage, minimize response time and keep the load on each resource low in order to achieve maximum throughput. Spreading work over several resources also increases reliability through redundancy. Load balancing can be achieved through either software or hardware.

Load balancing can be implemented in multiple components. The load balancer is the server or component that provides the load balancing service. It distributes the workload among the set of components or servers behind it, for example across a set of websites or a set of web servers. The load balancer accepts all incoming requests, chooses one server from the set according to its load balancing algorithm, and that server serves the request.

When my web server had heavy traffic that was creating bottlenecks, I had three options to overcome it:

- An upgrade – Replace the server with high-end hardware and hope it is fast enough to handle the load.

- Migration – Move the most heavily accessed websites to separate servers.

- Load balancer – Balance the traffic load across multiple mirrored servers. For most organizations, load balancing is the clear choice.

I preferred the most reliable choice, a load balancer.

The main concern when implementing a load balancer is handling websites that use sessions and cookies. When a user connects to a website for the first time, a session is created on the server to which the user was directed. If the site is load balanced and the user is directed to a second server on the next request, a new session is created there and the earlier session data is not available. What we need is a technique that ensures a user is reconnected to the same server every time they make a request to the same website, so that the session data is preserved.

As many of you may already know, cookies are stored in the user’s browser while session data is stored on the server. Sessions and cookies work together to create the illusion of persistence across connections to the same website.

Types of load balancers:

- Software Load Balancer

- Hardware Load Balancer

In this article I would like to explain load balancing concepts and scenarios on the server side. Of the various load balancing techniques, DNS load balancing is the simplest, and is also known as the poor man’s load handling technique. Since it is so widely used, let me explain it first.

DNS load balancer

In DNS-based load balancing, the balancing is performed by the DNS server. Round robin DNS is the most popular technique. Each DNS name is assigned more than one IP address. When a request is made for the website, DNS rotates through the available IP addresses in a loop, depending on the algorithm used to share the load. This is typically known as the “poor man’s” load balancing solution.

root@$ [/var/named]# vi xielestest.com.db

Append/modify the www entry:

xielestest.com. IN A 198.168.1.1

xielestest.com. IN A 198.168.1.2

xielestest.com. IN A 198.168.1.3

www IN A 198.168.1.1

www IN A 198.168.1.2

www IN A 198.168.1.3

Save the file and restart the BIND (named) service on the server.

Now let us see what happens with the DNS load balancer. When a query is made for xielestest.com, it will first give 198.168.1.1 as the IP of the website. The next time a request is made for the name, it will return 198.168.1.2, and so on. The order in which the IP addresses from the list are returned is determined by the round robin algorithm.
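The rotation described above can be sketched in a few lines of Python. This is only an illustration of the idea, not how BIND implements it; the function name `make_round_robin` is my own.

```python
from collections import deque


def make_round_robin(addresses):
    """Return a function that answers each query with the list rotated by one."""
    ring = deque(addresses)

    def answer():
        current = list(ring)  # order handed out for this query
        ring.rotate(-1)       # the next query starts one position later
        return current

    return answer


resolve = make_round_robin(["198.168.1.1", "198.168.1.2", "198.168.1.3"])
for _ in range(4):
    # Clients normally use the first address in the answer.
    print(resolve()[0])
# prints 198.168.1.1, 198.168.1.2, 198.168.1.3, then 198.168.1.1 again
```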

While the above example used DNS-based load balancing for web servers, we can balance mail servers and FTP servers in the same way. DNS rotates through the available IP addresses in a circular way to share the load. Furthermore, we can control the order of the round robin using “rrset-order” in the BIND configuration. The rrset-order statement defines the order in which multiple records of the same type are returned.

rrset-order { order_spec ; [ order_spec ; … ] };

fixed – records are returned in the order they are defined in the zone file

random – records are returned in a random order

cyclic – records are returned in a round-robin fashion

An example with rrset-order:

rrset-order {

class IN type A name "www.xielestest.com" order cyclic;

};
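To make the three orderings concrete, here is a simplified Python sketch. The function `order_records` and its `query_count` parameter are hypothetical names of mine; a real name server keeps its own state and may start the cycle at a different position.

```python
import random


def order_records(records, mode, query_count=0, rng=random):
    """Return the records in the order a given rrset-order mode would suggest."""
    if mode == "fixed":
        # Same order as in the zone file, every time.
        return list(records)
    if mode == "random":
        shuffled = list(records)
        rng.shuffle(shuffled)
        return shuffled
    if mode == "cyclic":
        # Rotate the starting point by one for each query already answered.
        shift = query_count % len(records)
        return list(records[shift:]) + list(records[:shift])
    raise ValueError("unknown mode: " + mode)


records = ["198.168.1.1", "198.168.1.2", "198.168.1.3"]
print(order_records(records, "fixed"))
print(order_records(records, "cyclic", query_count=1))
print(order_records(records, "random"))
```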

Pros and Cons of DNS load balancer

+ Inexpensive and easy to set up.

+ DNS round robin is a good way to increase capacity by distributing the load across multiple points.

+ Simplicity. It does not require networking experts to set up or to debug the system if a problem arises.

– If one of the hosts becomes unavailable, the DNS server does not know this, and will still continue to give out the IP of the downed server.

– IP addresses can be cached by clients, so a client may keep using the same server regardless of the rotation.

– SSL-enabled websites are harder to serve this way, since every server behind the name must present the same certificate.

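– Cannot keep a user on the same server, so websites that rely on sessions and cookies are not handled well.

– DNS load balancing only distributes connections; it does not take the actual load on each server into account, so it is not a true load balancing solution.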

Web server based load balancing

To overcome the single point of failure and other disadvantages of DNS-based balancing, web server based load balancing can help greatly. A web server based load balancer manages the load on the web server nodes and routes requests to the node with less load. It also takes care of sessions as well as connections.

Let us proceed with an example of setting up a load balancer for three web servers that are backed by an independent database server. The requirements are as follows:

- 1x Load Balancer server

- 3x Web Servers

- 1x MySQL server

There are different ways to achieve this goal. Here I will elaborate on open source load balancing using Nginx or HAProxy:

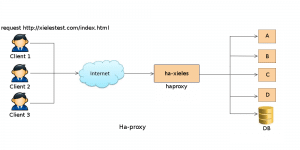

Load balancing via HAProxy: High Availability Proxy (HAProxy) is a load balancing proxy server that distributes the workload among a set of servers. It can load balance any TCP service, and is capable of both HTTP and TCP load balancing. HAProxy supports a wide range of load balancing algorithms, such as round robin, static round robin, least connection and source, along with server weights and session persistence. It is a fast solution that uses very little CPU. Configuration changes can be made without breaking existing connections. HAProxy analyzes both the request and the response and indexes each. Its configuration is stored in /etc/haproxy.cfg.

When HAProxy receives a client request that does not contain a cookie, the request is forwarded to a valid server, and a cookie “SERVERID” identifying that server is inserted in the response. When the client comes back with the cookie “SERVERID”, HAProxy forwards the request to the same server. Finally, if the server named in “SERVERID” dies, the requests are sent to another live server and the cookie is reassigned. However, older HAProxy releases (before 1.5) cannot handle SSL-enabled websites natively.

How HAProxy works (ha-xieles)

# The client browser requests http://xielestest.com/index.html

listen ha-xieles

bind 198.168.1.1:80 # the load balancer server "ha-xieles" receives the request here

mode http

balance roundrobin # balancing algorithm

cookie SERVERID insert indirect

option httpchk HEAD /index.html HTTP/1.0 # health check: is index.html being served?

server webA 192.168.1.11:80 cookie A check

server webB 192.168.1.12:80 cookie B check

server webC 192.168.1.13:80 cookie C check

server webD 192.168.1.14:80 cookie D check

- ha-xieles receives the clients’ requests.

- If a request does not contain a cookie, it is forwarded to a server chosen by the configured algorithm.

- A cookie “SERVERID” holding the server name (e.g. “A”) is inserted in the response. When the client comes back with the cookie “SERVERID=A”, “ha-xieles” recognizes it and forwards the request to server A.

- If server “webA” dies, the requests are sent to another valid server and the cookie is reassigned.
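The cookie logic in the steps above can be sketched roughly as follows. This is a toy model of the behaviour, not HAProxy code; the class `CookieBalancer` and its `route` method are hypothetical names of mine.

```python
import itertools


class CookieBalancer:
    """Toy model of cookie-based persistence with round robin as the fallback."""

    def __init__(self, servers):
        # servers maps a cookie value (e.g. "A") to a backend address.
        self.servers = dict(servers)
        self.alive = set(self.servers)
        self._cycle = itertools.cycle(list(self.servers))

    def _next_alive(self):
        # Walk the round-robin cycle until a live server turns up.
        for _ in range(len(self.servers)):
            key = next(self._cycle)
            if key in self.alive:
                return key
        raise RuntimeError("no live backend")

    def route(self, cookies):
        """Return (backend address, cookie value to set or None)."""
        key = cookies.get("SERVERID")
        if key in self.alive:
            return self.servers[key], None  # returning visitor, same server
        key = self._next_alive()            # new visitor, or the old server died
        return self.servers[key], key


lb = CookieBalancer({"A": "192.168.1.11:80", "B": "192.168.1.12:80"})
print(lb.route({}))                 # first visit: ('192.168.1.11:80', 'A')
print(lb.route({"SERVERID": "A"}))  # sticks to A: ('192.168.1.11:80', None)
lb.alive.discard("A")               # pretend webA failed its health check
print(lb.route({"SERVERID": "A"}))  # reassigned: ('192.168.1.12:80', 'B')
```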

Pros of using HAProxy

+ High availability proxy.

+ High single server performance.

+ MySQL connections can also be load balanced.

+ Websites that require sessions can be handled.

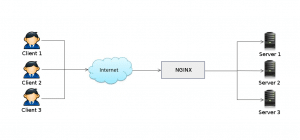

Load balancing via Nginx: Nginx can be used as a reverse proxy to load balance HTTP requests among back-end servers. By default the Nginx proxy uses the same round robin algorithm, which sends each visitor to one of a set of IPs in turn. To configure a server as an Nginx load balancer in front of the child web servers, you need to have Nginx installed on it.

You can install Nginx on CentOS servers using the yum command.

[root@server]# yum install nginx

Upstream module: The upstream directive describes a set of servers, that is, the nodes within the load balanced cluster.

Syntax: upstream name { … }

Open your website’s configuration file at /etc/nginx/sites-available/xielestest.com and add the following.

upstream xieles {

server host1.xielestest.com;

server host2.xielestest.com;

server host3.xielestest.com;

}

In the above configuration the upstream is named “xieles” and contains 3 web server nodes. Next, tell the “virtual host” about this upstream.

server {

location / {

proxy_pass http://xieles;

}

}

Once the changes are made, restart Nginx.

/etc/init.d/nginx restart

The configuration above is a simple proxy configuration. To get the most out of it, we can apply further directives in the Nginx proxy configuration. These directives are used to route site visitors more effectively. Let us now look at a few of them:

- Weight: The Nginx “weight” parameter sets the relative share of requests that each host should handle.

upstream xieles {

server host1.xielestest.com weight=1;

server host2.xielestest.com weight=2;

server host3.xielestest.com weight=6;

}

With a weight of 2, host2.xielestest.com receives twice as much traffic as host1. With a weight of 6, host3.xielestest.com receives three times as much traffic as host2 and six times as much as host1.

- IP hash: The ip_hash directive selects the server for each request according to the client’s IP address. With this directive, all requests from a particular client always go to the same server. If that server goes down, further requests from the client are transferred to another server, and from then on all requests from that client are served by the new server.
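To see how weights of 1, 2 and 6 turn into traffic shares, here is a small Python sketch of smooth weighted round robin, one common way to honour weights. I am not claiming this is exactly what Nginx runs internally; the function name `weighted_sequence` is my own.

```python
from collections import Counter


def weighted_sequence(weights, n):
    """Pick n servers so that each is chosen in proportion to its weight."""
    current = {name: 0 for name in weights}
    total = sum(weights.values())
    picks = []
    for _ in range(n):
        # Every server gains its weight, the current leader is picked,
        # and the leader is then pushed back by the total weight.
        for name, weight in weights.items():
            current[name] += weight
        chosen = max(current, key=current.get)
        current[chosen] -= total
        picks.append(chosen)
    return picks


weights = {"host1": 1, "host2": 2, "host3": 6}
counts = Counter(weighted_sequence(weights, 9))
print(counts["host1"], counts["host2"], counts["host3"])  # prints 1 2 6
```

Out of every 9 requests, host1 gets 1, host2 gets 2 and host3 gets 6, which matches the ratios described above.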

upstream backend {

ip_hash;

server host1.xielestest.com;

server host2.xielestest.com;

server host3.xielestest.com down;

}
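The idea behind IP hashing can be sketched as below. This is an assumption-laden illustration: SHA-256 stands in for whatever hash function the balancer really uses, the function name `pick_by_ip` is mine, and I hash only the first three octets of an IPv4 address so that clients from the same /24 network land on the same server.

```python
import hashlib
import ipaddress


def pick_by_ip(client_ip, servers, down=()):
    """Map a client IP to a server; retry with a new hash if that server is down."""
    addr = ipaddress.ip_address(client_ip)
    # IPv4: first three octets only. IPv6: the whole address.
    key = addr.packed[:3] if addr.version == 4 else addr.packed
    for attempt in range(len(servers) * 4):
        digest = hashlib.sha256(key + attempt.to_bytes(4, "big")).digest()
        candidate = servers[int.from_bytes(digest[:8], "big") % len(servers)]
        if candidate not in down:
            return candidate
    # Fall back to the first live server if rehashing kept hitting dead ones.
    live = [s for s in servers if s not in down]
    if not live:
        raise RuntimeError("no live backend")
    return live[0]


servers = ["host1.xielestest.com", "host2.xielestest.com", "host3.xielestest.com"]
first = pick_by_ip("203.0.113.10", servers)
print(first == pick_by_ip("203.0.113.10", servers))    # True: same client, same server
print(pick_by_ip("203.0.113.10", servers, down={first}) != first)  # True: moved elsewhere
```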

- Max fails: The Nginx load balancer normally distributes load in round robin fashion, and when a server fails it may still route requests to the downed node. The “max_fails” parameter can be used to overcome this issue. It is the maximum number of failed attempts to connect to a node before the node is labelled as “down” or “inactive”.

- Fail timeout: The “fail_timeout” parameter is normally used in combination with “max_fails”. It is the length of time for which the server is considered inoperative. Once the fail_timeout expires, new connection attempts to the server start again.
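How max_fails and fail_timeout interact can be sketched with a small state tracker. This is my own simplified model, not Nginx code: the class `PassiveHealth` is a hypothetical name, and time is passed in explicitly so the example stays deterministic.

```python
class PassiveHealth:
    """Track failures for one backend and decide whether it may receive traffic."""

    def __init__(self, max_fails=3, fail_timeout=30.0):
        self.max_fails = max_fails
        self.fail_timeout = fail_timeout
        self.failures = []     # timestamps of recent failed attempts
        self.down_until = 0.0  # the backend is skipped until this time

    def record_failure(self, now):
        # Only failures inside the fail_timeout window count.
        self.failures = [t for t in self.failures if now - t < self.fail_timeout]
        self.failures.append(now)
        if len(self.failures) >= self.max_fails:
            self.down_until = now + self.fail_timeout
            self.failures.clear()

    def record_success(self, now):
        self.failures.clear()

    def available(self, now):
        return now >= self.down_until


node = PassiveHealth(max_fails=2, fail_timeout=10.0)
node.record_failure(0.0)
print(node.available(1.0))   # True: one failure is still tolerated
node.record_failure(1.0)
print(node.available(5.0))   # False: marked down after the second failure
print(node.available(11.0))  # True: fail_timeout has expired, try again
```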

Pros of using Nginx

+ SSL enabled websites can be handled.

+ No single point of failure issues.

Hardware Load Balancers

Hardware load balancers are devices that split network load across multiple servers. Network load balancing devices fall into this category. Normally, network traffic is sent to a shared (listening) IP address, which is attached to the load balancer. On receiving a request for that IP, the load balancer device decides where to send it. This decision is made by the load balancing algorithm or strategy inside the device. Depending on the algorithm in use, the request is sent to the corresponding server, which handles it. The type of device determines whether the response goes back through the load balancer or directly to the end user. The usual load balancing strategies in hardware load balancers are:

- Round robin algorithm

- Least number of connections

- Weighted

- Fastest response time

- Server agent
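Round robin and weighting work the same way as in the software examples. As an illustration of one of the other strategies, here is a minimal Python sketch of “least number of connections”. The class `LeastConnections` is a hypothetical name of mine and says nothing about how any particular device implements it.

```python
class LeastConnections:
    """Send each new connection to the server with the fewest open connections."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def acquire(self):
        # Ties go to the server listed first.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call this when a connection to the server closes.
        self.active[server] -= 1


lb = LeastConnections(["web1", "web2"])
print(lb.acquire())  # web1
print(lb.acquire())  # web2
lb.release("web2")   # web2 finishes its request first
print(lb.acquire())  # web2 again, since it now has fewer open connections
```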

Pros and Cons of Hardware Load Balancers

+ Hardware load balancers overcome the troubles mentioned with the round robin algorithm by using virtual IP addresses. The load balancer shows a virtual IP address to the outside world, which maps to the addresses of each machine in the group.

– High cost devices

– Complexity

– Single point of failure

To summarize, despite being a single point of failure, a load balancer can greatly help to achieve higher levels of fault tolerance for your applications. Load balancers continue to improve in performance, scalability and features day by day.