Today, no matter what the purpose, we give utmost care and importance to our precious data. Data loss is a common nightmare for you and me alike. When the loss is of a database for which you’ve spent a good amount of man-hours and resources, it would be heartbreaking. If this is a matter of concern for you as well, its high time you think of implementing a cluster. MariaDB Galera Cluster!!!

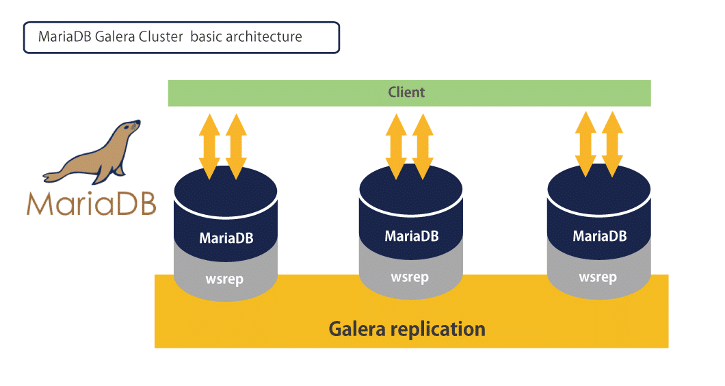

“MariaDB Galera Cluster is a Multimaster, easy-to-use, high-availability solution, which provides high system uptime, scalability, no data loss and is based on synchronous replication.” Now that’s music to my ears.

I guess you’ve noticed the term ‘Multimaster’ above. While master-slave replication is handy and commonly used, it’s not as flexible as a master-master replication. In a master-master replication setup, each node can accept changes and immediately replicate them across all nodes.

A minimal Galera cluster would consist 3 nodes. Why? It’s because, should there be a problem applying a change on one node (e.g., network problem, machine becomes unresponsive etc.), the two other nodes will have a quorum (i.e. a majority) and will be able to proceed with the commit.

Pros and cons

Lets have a look at the pros first:

- A high availability solution with synchronous replication, failover and resynchronization

- No loss of data

- All servers have up-to-date data (no slave lag)

- Read scalability

- ‘Pretty good’ write scalability

- High availability across data centers

And then there are the limitations:

- It supports only InnoDB

- With increasing number of writeable masters, the transaction rollback rate may increase, especially if there is write contention on the same dataset. This increases transaction latency.

- It is possible for a slow/overloaded master node to affect performance of the Galera Replication cluster, therefore it is recommended to have uniform servers across the cluster.

Well, now that you’ve a clear picture of what’s Mariadb Galera cluster, let’s see how this can be setup.

Install MariaDB with Galera Patches

Get a nice cup of coffee and maybe a cookie for deciding to read ahead. If that’s done, let’s get cracking.

We’re setting up 3 nodes (node01, node02, node03) with one interface each. Let’s assume the following IP addresses:

172.16.8.4

172.16.8.5

172.16.8.6

The packages we need on all the nodes are:

- rsync

- galera

- mariadb-galera-server

let’s start by adding the MariaDB Repositories first.

For Debian and Debian-based Linux distributions, you first need to install the Software Properties. The package names vary depending on your distribution. For Debian, run the following commands:

apt-get install python-software-properties apt-key adv --recv-keys --keyserver keyserver.ubuntu.com 0xcbcb082a1bb943db add-apt-repository 'deb http://mirror3.layerjet.com/mariadb/repo/5.5/debian wheezy main' apt-get update

For Ubuntu or a distribution that derives from Ubuntu, run:

apt-get install python-software-properties apt-key adv --recv-keys --keyserver keyserver.ubuntu.com 0xcbcb082a1bb943db add-apt-repository 'deb http://mirror3.layerjet.com/mariadb/repo/5.5/ubuntu precise main' apt-get update

Now that thats done, lets install the packages with a single blow:

DEBIAN_FRONTEND=noninteractive apt-get install -y rsync galera mariadb-galera-server

Voila, you now have a running MariaDB server on all nodes. However, none of them are configured to run as a node.

Configuring Galera

Implement the following configuration on all the nodes. We can use a separate configuration file /etc/mysql/conf.d/galera.cnf with the settings as (dont forget to change the IPs):

[mysqld]#mysql settings binlog_format=ROW default-storage-engine=innodb innodb_autoinc_lock_mode=2 query_cache_size=0 query_cache_type=0 bind-address=0.0.0.0 #galera settings wsrep_provider=/usr/lib/galera/libgalera_smm.so wsrep_cluster_name="my_cluster" wsrep_cluster_address="gcomm://172.16.8.5,172.16.8.6,172.16.8.4" wsrep_sst_method=rsync

Start your engines

we need to stop mysqld on all nodes first.

node01# service mysql stopnode02# service mysql stop node03# service mysql stop

then start mysqld in the first node

node01# service mysql start --wsrep-new-cluster

Let's check the cluster size status

node01# mysql -u root -e 'SELECT VARIABLE_VALUE as "cluster size" FROM INFORMATION_SCHEMA.GLOBAL_STATUS WHERE VARIABLE_NAME="wsrep_cluster_size"'

If you see the cluster size as '1', great!

We just need to start the other nodes and let them join the cluster.

node2# service mysql start[ ok ] Starting MariaDB database server: mysqld . . . . . . . . . .. [info] Checking for corrupt, not cleanly closed and upgrade needing tables.. node01:/home/debian# ERROR 1045 (28000): Access denied for user 'debian-sys-maint'@'localhost' (using password: YES)node3# service mysql start[ ok ] Starting MariaDB database server: mysqld . . . . . . . . . .. [info] Checking for corrupt, not cleanly closed and upgrade needing tables.. node03:/home/debian# ERROR 1045 (28000): Access denied for user 'debian-sys-maint'@'localhost' (using password: YES)

Ignore the above errors for now.

Let’s quickly check the cluster size status once again. (as this is a cluster, you should get the same result when you check this on any of the nodes)

node03# mysql -u root -e 'SELECT VARIABLE_VALUE as "cluster size" FROM INFORMATION_SCHEMA.GLOBAL_STATUS WHERE VARIABLE_NAME="wsrep_cluster_size"'

If you got '3' as result, nice!!!

The final step is to fix the errors we saw while starting mysqld in node2 and node3.

As we had copied over the data from node01 to node2 and node3, there's mismatch of credentials mentioned in /etc/mysql/debian.cnf on both the nodes. This will also prevent you from stopping the mysqld daemon.

This can be easily fixed by copying /etc/mysql/debian.cnf from node1 to the other nodes

Done till this?

Get yourself another cookie, you've now completed creating your MariaDB Galera Cluster.

Now all you need to do is destroy the servers and start from scratch.

Just kidding!!! Enjoy your cluster and have fun. Adios.